As practices evaluate staffing and patient flow patterns, technicians are increasingly expected to perform diagnostic testing and, more broadly, to wear more hats. This makes the technician more valuable to the practice, but only if the technician is properly trained.

We often think of proper training in terms of producing data, but it involves much more. Fundamentally, the operator must:

- understand how the device produces data

- recognize artifacts and know how to remedy them

- be able to explain the procedure to the patient to gain cooperation and produce quality data.

This is no small order. In more than 20 years as an instructor, I have found that most technicians have some of these qualities, some have most of them, and few have all of them. This is in no way a reflection on them as professionals, but rather on the quality of their initial training and continuing education. It is also an acknowledgment that while it may be ideal to have dedicated imagers whose focus and training are limited to diagnostic testing, this is not always feasible: today's cross-trained technicians wear a number of hats and may receive training in any number of clinical and business areas in addition to imaging.

This article discusses the benefits of well-trained technicians: team members who follow the "do it right the first time," or DRIFT, concept of testing.

The problem with poor-quality data

Having well-trained staff allows for more autonomy within the practice, because clinicians can trust technicians to know what data is needed and how to produce the best possible data, image or calculation. Poor-quality data creates two problems. First, it wastes time, because the patient and technician may have to return to the device so the procedure can be repeated. Second, in a hectic, busy practice, poor data may go unnoticed and be accepted as quality data.

Most diagnostic tests are dynamic, yet the end result the clinician sees is a static capture of that dynamic test. This process relies on the technician having captured the best-quality image, meaning an image that gives the clinician the information needed. Clinicians use this data to formulate a diagnosis and/or treatment plan, so providing them with poor information may lead to a wrong diagnosis or incorrect treatment.

The value of consistency

Diagnostic testing, regardless of the setting, should be performed with the same meticulousness as a clinical trial. Every user needs to perform each test in the same manner, every time. This standardization is the only way to ensure accurate, repeatable data. If one technician performs a diagnostic test at a patient's baseline visit and another technician performs the same test differently at a follow-up visit, the clinician is not evaluating the results under the same parameters. It is comparing apples to oranges. Responsibility falls on the technician to work to the highest ethical standards, and on the practice to establish and maintain protocols.

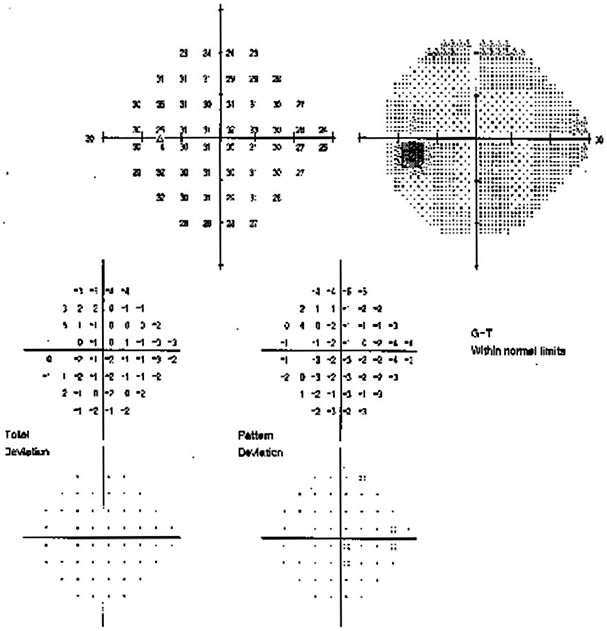

Let’s look at an example

A patient is referred as a glaucoma suspect. Technician A performs a visual field using the patient's best-corrected refraction. The patient returns in 6 months, and Technician B performs a visual field, this time without putting the correct lens in place and without paying attention to vertex distance. As a result, the visual field appears more constricted and stimuli are missed in some areas.

One of two things may happen at this point. The clinician may recognize a significant difference from the previous visual field and request that the test be repeated. This wastes practice, technician and patient time and raises the practice's overall cost. Or the clinician may not suspect poor technique and may diagnose worsening glaucoma. Both scenarios put the patient and practice at risk, and both can easily be avoided with strong, consistently enforced practice standards.

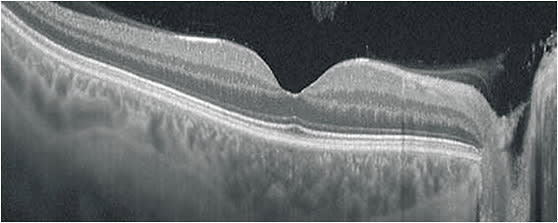

The value of fundamentals: an OCT scenario

Another very common problem I have witnessed is variable results in optical coherence tomography (OCT) imaging. Since its introduction, OCT has become the most widely used diagnostic imaging device in ophthalmology. Device manufacturers have streamlined the acquisition process as much as they can, but without proper training, a technician may still fail to produce quality images.

For example, it is critical to understand that OCT uses near-infrared light to image tissue. A user who understands how OCT produces an image will know that anything affecting the light will affect the OCT image. This principle applies to dry eye, cataract and other factors that influence the media the light passes through. Recognizing ocular anatomy and understanding how pathology affects a patient's fixation are basic tools needed to produce quality images.

In addition, a clean, crisp image is not necessarily a quality image. Proper placement and repeatability of a scan are the foundation of a quality image, for retina scans and glaucoma scans alike. OCT devices are incredibly sensitive and can measure change at the micron level, but if scans are not repeated in an exact, standardized fashion, it does not matter how good they look. The data will be misleading, and the clinician could use it to stop or start a treatment that they otherwise would not have.

Using all the tools

The technician who has all the tools necessary to perform quality diagnostic testing will work with confidence, earn the clinician's trust, and take pride in producing the best possible data, becoming a critically important part of the chain of patient care. So, do it right the first time and become a DRIFTer.